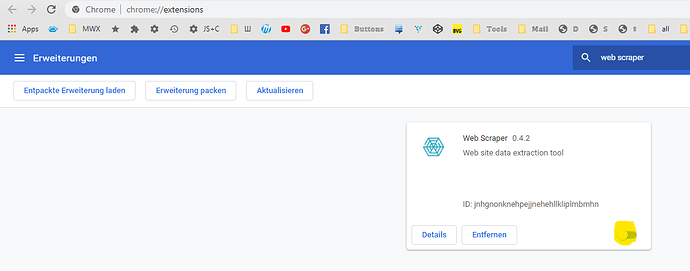

Web Scraper version: 0.4.2

Chrome version: 79.0.3945.117 (64-Bit)

OS: Windows 10 x64

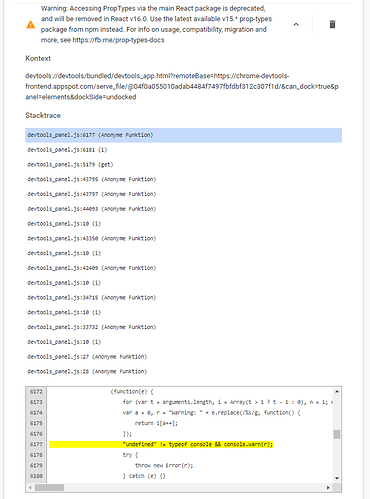

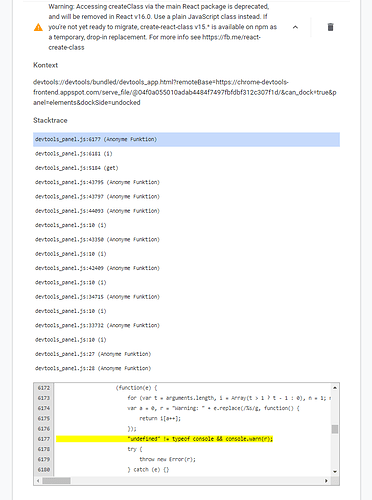

Enabling Web Scraper is enough to make Chrome crash repeatedly, 2-10 minutes after the browser starts. There are some sitemaps stored in Web Scraper, but no scrape is running; simply having the extension enabled triggers the crashes. It is definitely Web Scraper: I tested Chrome with it as the only active extension.