Scraper crash with large dataset

When Scraper starts up, it start generate statistics, and when the underlying data is large enough it runs out of memory and crashes.

The solution would be to not try to fit the entire database into memory.

This problem prevents Scraper from starting up, and you need to "hack" the scraper code with the dev tools to get it running.

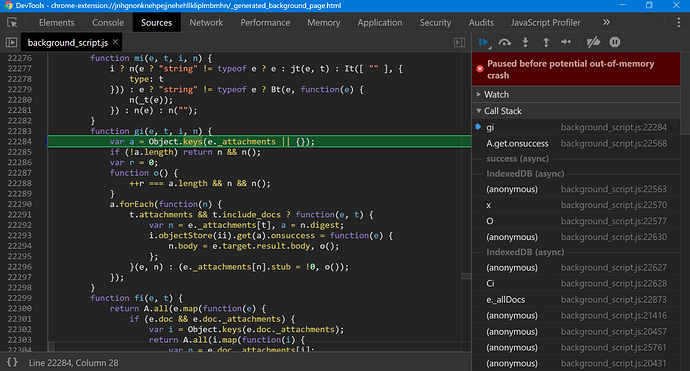

Update 1: Screen shoot & Stack trace from crash

This shows how the function reportStats (call stack level 5) is the top level function for this crash.

Screen shoot

Stack trace

gi (background_script.js:22284)

A.get.onsuccess (background_script.js:22568)

success (async)

IndexedDB (async)

(anonymous) (background_script.js:22563)

x (background_script.js:22570)

O (background_script.js:22577)

(anonymous) (background_script.js:22630)

IndexedDB (async)

(anonymous) (background_script.js:22627)

Ci (background_script.js:22628)

e._allDocs (background_script.js:22873)

(anonymous) (background_script.js:21416)

(anonymous) (background_script.js:20457)

(anonymous) (background_script.js:25761)

(anonymous) (background_script.js:20431)

(anonymous) (background_script.js:20425)

(anonymous) (background_script.js:25761)

(anonymous) (background_script.js:20459)

Qe.execute (background_script.js:21498)

Qe.ready (background_script.js:21502)

(anonymous) (background_script.js:21097)

(anonymous) (background_script.js:22714)

(anonymous) (background_script.js:22718)

(anonymous) (background_script.js:22975)

u (background_script.js:18396)

characterData (async)

i (background_script.js:18376)

e.exports (background_script.js:18402)

(anonymous) (background_script.js:22974)

(anonymous) (background_script.js:23089)

(anonymous) (background_script.js:22711)

wi (background_script.js:22635)

(anonymous) (background_script.js:22722)

Ti (background_script.js:22723)

Ke (background_script.js:21087)

getSitemapDataDb (background_script.js:20247)

(anonymous) (background_script.js:20308)

(anonymous) (background_script.js:20224)

n (background_script.js:20204)

getSitemapData (background_script.js:20307)

(anonymous) (background_script.js:20039)

o (background_script.js:19706)

Promise.then (async)

c (background_script.js:19721)

o (background_script.js:19706)

Promise.then (async)

c (background_script.js:19721)

o (background_script.js:19706)

Promise.then (async)

c (background_script.js:19721)

(anonymous) (background_script.js:19723)

n (background_script.js:19703)

getDatabaseStats (background_script.js:19878)

(anonymous) (background_script.js:20046)

(anonymous) (background_script.js:19723)

n (background_script.js:19703)

getStats (background_script.js:20044)

(anonymous) (background_script.js:20110)

(anonymous) (background_script.js:19723)

n (background_script.js:19703)

reportStats (background_script.js:20109)

(anonymous) (background_script.js:20095)

(anonymous) (background_script.js:19723)

n (background_script.js:19703)

(anonymous) (background_script.js:20092)