I have about 1,400 store links and their names, and I want to save each store's results to its own file (1,400 files in total). All of the pages use the same selectors. Right now, I change the URL in 'Edit metadata', click 'Scrape', wait for it to finish, and then manually save the result using the store name as the file name. Is there a way to automate these steps, either with Web Scraper itself, with Python, or with something else? Thanks.

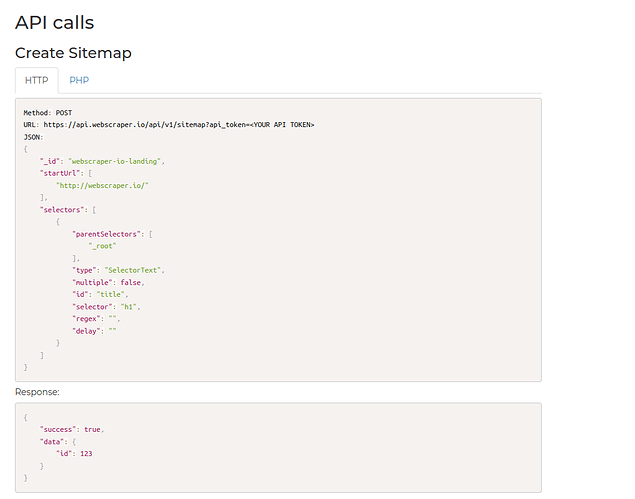
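
Whichever route is taken, each store needs its own copy of the sitemap in which only the start URL changes. Below is a minimal Python sketch of that step. It assumes the sitemap has been exported from Web Scraper as JSON with `_id` and `startUrl` keys, and that the stores are listed in a hypothetical `stores.csv` with `name` and `url` columns. The file names, the output folder, and the slug rules used for ids (lowercase letters, digits, and hyphens, starting with a letter) are illustrative assumptions, not documented Web Scraper requirements.

```python
import copy
import csv
import json
import re
from pathlib import Path


def slugify(name: str) -> str:
    """Lowercase a store name and replace each run of other characters with '-'."""
    slug = re.sub(r"[^a-z0-9]+", "-", name.lower()).strip("-")
    # Assumption: ids should start with a letter, so prefix anything else.
    if not slug or not slug[0].isalpha():
        slug = f"store-{slug}".rstrip("-")
    return slug


def build_store_sitemaps(template: dict, stores: list[tuple[str, str]]) -> dict[str, dict]:
    """Return {slug: sitemap}, one copy of the template per (name, url) pair."""
    sitemaps: dict[str, dict] = {}
    for name, url in stores:
        base = slug = slugify(name)
        suffix = 2
        while slug in sitemaps:  # two stores share a name: add -2, -3, ...
            slug = f"{base}-{suffix}"
            suffix += 1
        sitemap = copy.deepcopy(template)  # selectors stay identical for every store
        sitemap["_id"] = slug
        sitemap["startUrl"] = [url]  # the only per-store change
        sitemaps[slug] = sitemap
    return sitemaps


def main(template_path: str, stores_path: str, out_dir: str) -> None:
    """Read the template and the store list, then write one sitemap file per store."""
    template = json.loads(Path(template_path).read_text(encoding="utf-8"))
    with open(stores_path, newline="", encoding="utf-8") as f:
        stores = [(row["name"], row["url"]) for row in csv.DictReader(f)]
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for slug, sitemap in build_store_sitemaps(template, stores).items():
        (out / f"{slug}.json").write_text(json.dumps(sitemap, indent=2), encoding="utf-8")
```

Calling `main("sitemap-template.json", "stores.csv", "sitemaps")` would write one JSON file per store. Each file can then be imported into Web Scraper, and the same slug can serve as a file-system-safe name for that store's results.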

@Qiu Hi, if you are looking to automate the data delivery, the workflow should be structured as follows:

- Set up a notification endpoint (in this case, the server on which you would like to receive the notifications).

- Once a scraping job has finished, a notification is posted to your endpoint stating that this particular job has finished running. Example:

  - `"scrapingjob_id": 1234`
  - `"status": "finished"`
  - `"sitemap_id": 12`
  - `"sitemap_name": "my-sitemap"`
  - `"custom_id": "custom-scraping-job-12"`

- Once your server receives this notification, it should execute a 'Download a scraping job' API call (in JSON or CSV format), which requires the API to be configured correctly on your end. This retrieves the finished data, which you can then handle on your end as needed.
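
The steps above can be sketched as a small Python webhook receiver that uses only the standard library. This is a minimal sketch, not Web Scraper's own client. The download URL pattern in `DOWNLOAD_URL`, the `API_TOKEN` placeholder, the port, and the `scraped/` output folder are assumptions to be checked against the API documentation. Because the encoding of the notification body is not stated here, `parse_notification` accepts either JSON or form-encoded fields. Assuming each scraping job was started with the store name as its `custom_id`, the saved file is named after the store.

```python
import json
import urllib.parse
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from pathlib import Path

API_TOKEN = "YOUR_API_TOKEN"  # placeholder: your Web Scraper Cloud API token
# Assumed URL pattern for the "Download a scraping job" call; verify it in the API docs.
DOWNLOAD_URL = "https://api.webscraper.io/api/v1/scraping-job/{job_id}/{fmt}?api_token={token}"
OUTPUT_DIR = Path("scraped")


def parse_notification(body: bytes, content_type: str) -> dict:
    """Read the notification fields from a JSON or a form-encoded request body."""
    text = body.decode("utf-8")
    if content_type.startswith("application/json"):
        return json.loads(text)
    # Form-encoded: keep the first value of each field.
    return {key: values[0] for key, values in urllib.parse.parse_qs(text).items()}


def download_url(job_id, token: str, fmt: str = "csv") -> str:
    """Build the (assumed) download URL for a finished job, as "csv" or "json"."""
    return DOWNLOAD_URL.format(job_id=job_id, fmt=fmt, token=urllib.parse.quote(token))


def output_path(note: dict, fmt: str = "csv") -> Path:
    """Name the file after custom_id (e.g. a store name), falling back to the job id."""
    name = note.get("custom_id") or f"job-{note['scrapingjob_id']}"
    safe = "".join(c if c.isalnum() or c in "-_" else "_" for c in str(name))
    return OUTPUT_DIR / f"{safe}.{fmt}"


class NotificationHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        note = parse_notification(self.rfile.read(length), self.headers.get("Content-Type", ""))
        # Reply with an empty 200 so the sender knows the notification arrived.
        self.send_response(200)
        self.send_header("Content-Length", "0")
        self.end_headers()
        if note.get("status") != "finished":
            return
        # Fetch the finished job's data and save it under its custom_id.
        OUTPUT_DIR.mkdir(exist_ok=True)
        with urllib.request.urlopen(download_url(note["scrapingjob_id"], API_TOKEN)) as resp:
            output_path(note).write_bytes(resp.read())


def serve(port: int = 8000) -> None:
    """Listen for notifications until interrupted; threads keep slow downloads from blocking."""
    ThreadingHTTPServer(("0.0.0.0", port), NotificationHandler).serve_forever()
```

Calling `serve()` on a publicly reachable machine and registering its URL as the notification endpoint would complete the loop. `http.server` is fine for a sketch like this, but a production deployment would usually sit behind a proper web server.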

Here is the documentation on webhooks and the API: