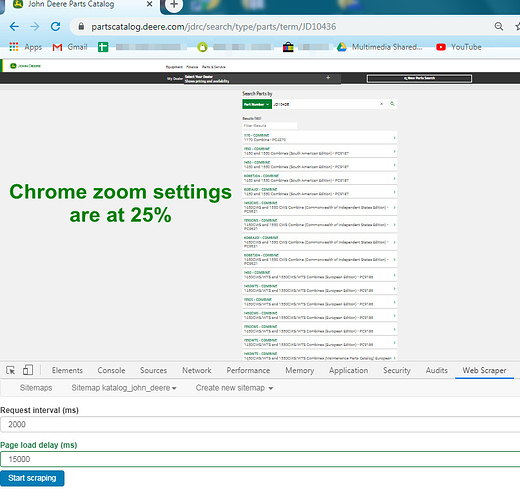

Describe the problem.

I'm trying to extract data (the model and type of each machine, green text only) from a site with dynamic scrolling, but the web scraper does not scroll the page.

Can someone help me with this?

URL: https://partscatalog.deere.com/jdrc/search/type/parts/term/JD10436

Sitemap:

{"_id":"katalog_john_deere","startUrl":["https://partscatalog.deere.com/jdrc/search/type/parts/term/JD10436"],"selectors":[{"id":"Maszyna","type":"SelectorElementScroll","parentSelectors":["_root"],"selector":".linkList a","multiple":true,"delay":"2000"},{"id":"kod","type":"SelectorText","parentSelectors":["_root"],"selector":".linkList a","multiple":true,"regex":"","delay":0}]}