Hello all,

I am running into a problem on capterra.com. I am trying to scrape the name and website URL of each LMS provider listed on this page: https://www.capterra.com/learning-management-system-software/

My current sitemap is set up like this: an Element selector with two child selectors, a Text selector for the company name and a Popup Link selector for the URL.

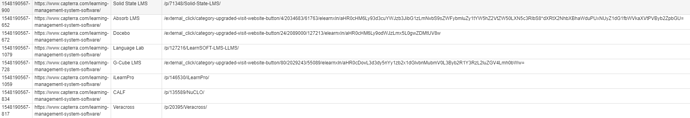

The issue is that the scraper opens every link in a new tab, as if it were extracting the URL from each one, but at the end of the process no data has been scraped. I have tried increasing the delay to 5000 ms, but that does not seem to help. Any ideas on how to proceed?

sitemap: {"_id":"capterralms","startUrl":["https://www.capterra.com/learning-management-system-software/"],"selectors":[{"id":"element","type":"SelectorElement","parentSelectors":["_root"],"selector":"div.card","multiple":true,"delay":0},{"id":"Company name","type":"SelectorText","parentSelectors":["element"],"selector":"a.external-product-link","multiple":false,"regex":"","delay":0},{"id":"url","type":"SelectorPopupLink","parentSelectors":["element"],"selector":"a.button","multiple":false,"delay":"5000"}]}
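To make the selector hierarchy easier to check, here is a minimal Python sketch that parses the sitemap above and prints its selector tree. It only inspects the exported JSON; it does not run the scraper or explain why no data comes back. The helper name `selector_tree` is made up for illustration and is not part of Web Scraper.

```python
import json

# The sitemap exactly as exported above.
SITEMAP = r'''{"_id":"capterralms","startUrl":["https://www.capterra.com/learning-management-system-software/"],"selectors":[{"id":"element","type":"SelectorElement","parentSelectors":["_root"],"selector":"div.card","multiple":true,"delay":0},{"id":"Company name","type":"SelectorText","parentSelectors":["element"],"selector":"a.external-product-link","multiple":false,"regex":"","delay":0},{"id":"url","type":"SelectorPopupLink","parentSelectors":["element"],"selector":"a.button","multiple":false,"delay":"5000"}]}'''


def selector_tree(sitemap, parent="_root", depth=0):
    """Hypothetical helper: list selectors under `parent`, indented by depth."""
    lines = []
    for sel in sitemap["selectors"]:
        if parent in sel["parentSelectors"]:
            lines.append("  " * depth + f'{sel["id"]} ({sel["type"]}): {sel["selector"]}')
            lines.extend(selector_tree(sitemap, sel["id"], depth + 1))
    return lines


sitemap = json.loads(SITEMAP)
tree = selector_tree(sitemap)
print("\n".join(tree))

# Record how each selector's delay is stored in the export.
delay_types = {sel["id"]: type(sel["delay"]).__name__ for sel in sitemap["selectors"]}
print(delay_types)
```

This confirms that both child selectors sit under `element`, and it shows that the delay on `url` is stored as the string `"5000"` while the other two are the number `0`. Whether that difference matters to the scraper is not known.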