Hi Guys,

I'm trying to scrape bits of data from different tabs and build a single CSV row from them. I almost have it working, but for some reason the data ends up split across 2 rows.

The idea is to create a single row that has the following columns:

- COL1 - Fund (Name of the Fund)

- COL2 - YTD (Year To Date from "&tab=1")

- COL3 - Risk (Risk Rating from "&tab=2")

I've set up the Link selectors for tab 1 and tab 2 the same way, but only one of them ends up on the same row in the CSV export.

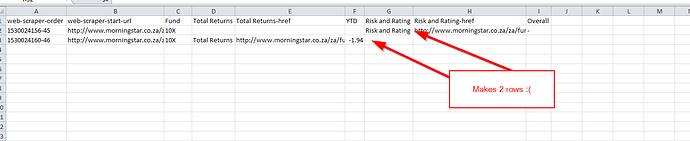

It exports like this:

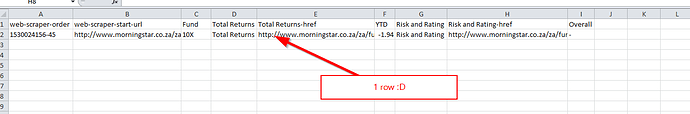

Trying to get it like this:

Any advice would be greatly appreciated!

Url:

http://www.morningstar.co.za/za/funds/snapshot/snapshot.aspx?id=F00000VCZN

Sitemap:

```json
{"_id":"morningstar","startUrl":["http://www.morningstar.co.za/za/funds/snapshot/snapshot.aspx?id=F00000WSUW"],"selectors":[{"id":"Fund","type":"SelectorText","selector":"div#snapshotTitleDiv div.snapshotTitleBox h1","parentSelectors":["_root"],"multiple":false,"regex":"","delay":0},{"id":"Total Returns","type":"SelectorLink","selector":"div#snapshotTabNewDiv tr:nth-of-type(2) a.snapshotNavLinkText","parentSelectors":["_root"],"multiple":false,"delay":0},{"id":"YTD","type":"SelectorText","selector":"div.layout_content div#returnsTrailingDiv tr:nth-of-type(8) td.col2","parentSelectors":["Total Returns"],"multiple":false,"regex":"","delay":0},{"id":"Risk and Rating","type":"SelectorLink","selector":"div#snapshotTabNewDiv tr:nth-of-type(3) a.snapshotNavLinkText","parentSelectors":["_root"],"multiple":false,"delay":0},{"id":"Risk","type":"SelectorText","selector":"div.layout_content div#ratingRatingDiv td tr:nth-of-type(5) td.value:nth-of-type(3)","parentSelectors":["Risk and Rating"],"multiple":false,"regex":"","delay":0}]}
```

SOLVED

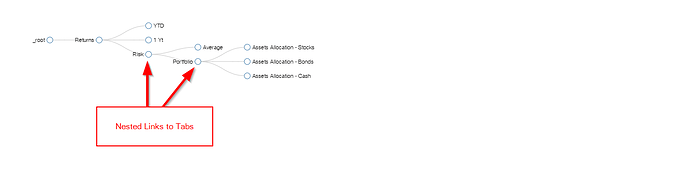

You just need to nest the links to the tabs.

Set up the selectors for the first page/tab, then create a Link selector to the next tab, set up the selectors for that tab, and then nest another Link selector under it, chaining everything together.
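As a rough sketch, applying that to the sitemap from the question might look like the version below. The only assumed change is that the "Risk and Rating" link selector gets "Total Returns" as its parent instead of "_root", so the chain runs _root → Total Returns → Risk and Rating. This assumes the tab navigation (div#snapshotTabNewDiv) is also present on the Total Returns tab page, so the same link selector still matches there. The CSS selectors are copied unchanged from the question and haven't been re-checked against the live site.

```json
{
  "_id": "morningstar",
  "startUrl": ["http://www.morningstar.co.za/za/funds/snapshot/snapshot.aspx?id=F00000WSUW"],
  "selectors": [
    {"id": "Fund", "type": "SelectorText", "selector": "div#snapshotTitleDiv div.snapshotTitleBox h1", "parentSelectors": ["_root"], "multiple": false, "regex": "", "delay": 0},
    {"id": "Total Returns", "type": "SelectorLink", "selector": "div#snapshotTabNewDiv tr:nth-of-type(2) a.snapshotNavLinkText", "parentSelectors": ["_root"], "multiple": false, "delay": 0},
    {"id": "YTD", "type": "SelectorText", "selector": "div.layout_content div#returnsTrailingDiv tr:nth-of-type(8) td.col2", "parentSelectors": ["Total Returns"], "multiple": false, "regex": "", "delay": 0},
    {"id": "Risk and Rating", "type": "SelectorLink", "selector": "div#snapshotTabNewDiv tr:nth-of-type(3) a.snapshotNavLinkText", "parentSelectors": ["Total Returns"], "multiple": false, "delay": 0},
    {"id": "Risk", "type": "SelectorText", "selector": "div.layout_content div#ratingRatingDiv td tr:nth-of-type(5) td.value:nth-of-type(3)", "parentSelectors": ["Risk and Rating"], "multiple": false, "regex": "", "delay": 0}
  ]
}
```

Because both Link selectors have "multiple": false and sit in one chain rather than side by side under _root, Fund, YTD and Risk should come out on a single CSV row.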