Wait for a page to load, or continue from where the scraper left off

Hello. I need help with the scraper. I'm very new to this; this is my first scraping project. I managed to create a sitemap and it works fine, but my problem is the site I'm trying to scrape. It is not the best site: sometimes a page takes a long time to load, and the scraper closes in the middle of scraping.
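For the "continue from where it left off" part, a common pattern in a hand-written scraper is checkpointing: record each finished URL in a file and skip those URLs on the next run. The Python below is a minimal sketch under that assumption; `scrape_with_checkpoint`, the `scrape_page` callback, and the checkpoint file are hypothetical names for illustration, not features of the scraper used in this post.

```python
import json
from pathlib import Path
from typing import Callable, Iterable


def scrape_with_checkpoint(
    urls: Iterable[str],
    scrape_page: Callable[[str], dict],  # hypothetical per-page scrape function
    checkpoint: Path,                    # hypothetical file holding finished URLs
) -> list[dict]:
    """Scrape each URL once, recording finished URLs so a rerun can resume."""
    # Load the URLs finished by a previous (possibly interrupted) run.
    done: set[str] = set()
    if checkpoint.exists():
        done = set(json.loads(checkpoint.read_text()))

    results = []
    for url in urls:
        if url in done:
            continue  # already scraped before the crash; skip it
        results.append(scrape_page(url))
        done.add(url)
        # Persist progress after every page so a crash loses at most one page.
        checkpoint.write_text(json.dumps(sorted(done)))
    return results
```

Writing the checkpoint after every page costs one small file write per page, but a crash then loses at most the page that was in progress.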

My question is: how can I make the scraper wait for a page to load when I don't know how long it will take?
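When the load time is unknown, the usual approach is to poll for a sign that the page is ready (for example, that the product element exists) with an upper time limit, rather than sleeping for a fixed time. The sketch below shows that pattern in plain Python; `wait_until` is a hypothetical helper, and the `condition` callback stands in for whatever readiness check your tool offers. Selenium's `WebDriverWait(...).until(...)` implements the same idea for browser automation.

```python
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],  # hypothetical readiness check, e.g. "element exists"
    timeout: float = 60.0,
    poll_interval: float = 0.5,
) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True  # the page (or element) is ready
        if time.monotonic() >= deadline:
            return False  # give up instead of waiting forever
        time.sleep(poll_interval)
```

Pick a generous `timeout` for a slow site; returning `False` lets the caller log and retry that page instead of aborting the whole run.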

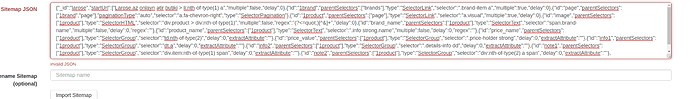

Here is my sitemap:

```json
{"_id":"larose","startUrl":["Larose.az onlayn ətir butiki > li:nth-of-type(1) a","multiple":false,"delay":0},
{"id":"1brand","parentSelectors":["brands"],"type":"SelectorLink","selector":".brand-item a","multiple":true,"delay":0},
{"id":"page","parentSelectors":["1brand","page"],"paginationType":"auto","selector":"a.fa-chevron-right","type":"SelectorPagination"},
{"id":"1product","parentSelectors":["page"],"type":"SelectorLink","selector":"a.visual","multiple":true,"delay":0},
{"id":"image","parentSelectors":["1product"],"type":"SelectorHTML","selector":"div.product > div:nth-of-type(1)","multiple":false,"regex":"(?<=quot;)[^&]+","delay":0},
{"id":"brand_name","parentSelectors":["1product"],"type":"SelectorText","selector":"span.brand-name","multiple":false,"delay":0,"regex":""},
{"id":"product_name","parentSelectors":["1product"],"type":"SelectorText","selector":".info strong.name","multiple":false,"delay":0,"regex":""},
{"id":"price_name","parentSelectors":["1product"],"type":"SelectorGroup","selector":"td:nth-of-type(2)","delay":0,"extractAttribute":""},
{"id":"price_value","parentSelectors":["1product"],"type":"SelectorGroup","selector":".price-holder strong","delay":0,"extractAttribute":""},
{"id":"info1","parentSelectors":["1product"],"type":"SelectorGroup","selector":"dt.a","delay":0,"extractAttribute":""},
{"id":"info2","parentSelectors":["1product"],"type":"SelectorGroup","selector":".details-info dd","delay":0,"extractAttribute":""},
{"id":"note1","parentSelectors":["1product"],"type":"SelectorGroup","selector":"div.item:nth-of-type(1) span","delay":0,"extractAttribute":""},
{"id":"note2","parentSelectors":["1product"],"type":"SelectorGroup","selector":"div:nth-of-type(2) a span","delay":0,"extractAttribute":""},
{"id":"note3","parentSelectors":["1product"],"type":"SelectorGroup","selector":"div.item:nth-of-type(3) span","delay":0,"extractAttribute":""}]}
```
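Within the sitemap itself, most selectors carry a `delay` field (0 everywhere in the sitemap above), which sets a wait in milliseconds before that selector is applied. A fixed delay does not adapt to the actual load time, but raising it is a simple workaround for a slow site. The Python sketch below raises it for every selector at once. It assumes a well-formed export whose selectors sit under a top-level `"selectors"` key (that part of the posted sitemap is garbled), and `set_selector_delay` is a hypothetical helper.

```python
import json


def set_selector_delay(sitemap_json: str, delay_ms: int) -> str:
    """Return a copy of a sitemap with a larger per-selector delay.

    Only selectors that already have a "delay" field are changed; the
    pagination selector in the sitemap above has none, so it is left alone.
    """
    sitemap = json.loads(sitemap_json)
    # Assumption: selectors live under a top-level "selectors" key.
    for selector in sitemap.get("selectors", []):
        if "delay" in selector:
            selector["delay"] = delay_ms
    # ensure_ascii=False keeps non-ASCII text (e.g. Azerbaijani names) readable.
    return json.dumps(sitemap, ensure_ascii=False)
```

The result can be re-imported as a new sitemap; a value of a few thousand milliseconds is a reasonable starting point to experiment with on a slow site.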