Hello everyone,

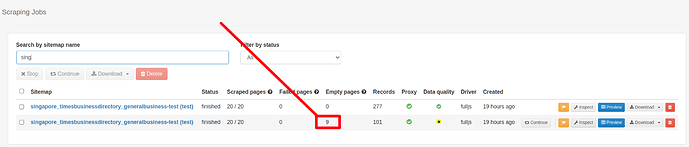

I'm currently trying to set up pagination. On normal websites it works like a charm, but on this website the scraper jumps to seemingly random pages, follows links in no particular order, and scrapes the data out of sequence. Any idea what is causing this?

Thank you.

URL: Company Listings - Singapore Business Directory

Sitemap:

{"_id":"singapore_timesbusinessdirectory_generalbusiness","startUrl":["https://www.timesbusinessdirectory.com/company-listings?page=[1-20]"],"selectors":[{"id":"company","parentSelectors":["_root"],"type":"SelectorLink","selector":"h3 a","multiple":true,"delay":0},{"id":"phone","parentSelectors":["company"],"type":"SelectorText","selector":".valuephone a","multiple":false,"delay":0,"regex":""},{"id":"email","parentSelectors":["company"],"type":"SelectorHTML","selector":"a#iconCompanyEmail","multiple":false,"regex":"[\\'\\\"][a-zA-Z0-9-_.]+\\@[a-zA-Z0-9-_]+\\.(\\w+.){1,2}[\\'\\\"]","delay":0},{"id":"website","parentSelectors":["company"],"type":"SelectorElementAttribute","selector":".valuewebsite a","multiple":false,"delay":0,"extractAttribute":"href"},{"id":"email_test","parentSelectors":["company"],"type":"SelectorHTML","selector":"a#iconCompanyEmail","multiple":false,"regex":"[a-zA-Z0-9-_.]+\\@[a-zA-Z0-9-_]+(\\.\\w+){1,2}","delay":0},{"id":"contact_individual","parentSelectors":["company"],"type":"SelectorText","selector":"p:nth-of-type(2)","multiple":false,"delay":0,"regex":""},{"id":"categories","parentSelectors":["company"],"type":"SelectorText","selector":"div:nth-of-type(6) div.company-description","multiple":false,"delay":0,"regex":""}]}
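For reference, here is a minimal Python sketch of what I understand the `[1-20]` range in `startUrl` to mean: one start URL per page number, from 1 to 20. The helper names `expand_range_url` and `page_number` are hypothetical and not part of Web Scraper; the second one only shows how scraped rows could be put back in page order afterwards, assuming each row keeps the start URL it came from.

```python
import re
from urllib.parse import urlparse, parse_qs


def expand_range_url(pattern: str) -> list[str]:
    """Expand one [start-end] range in a URL pattern into concrete URLs.

    Hypothetical helper: it mimics my understanding of the range syntax,
    not Web Scraper's actual implementation.
    """
    match = re.search(r"\[(\d+)-(\d+)\]", pattern)
    if match is None:
        # No range in the pattern, so it is already a single concrete URL.
        return [pattern]
    start, end = int(match.group(1)), int(match.group(2))
    prefix, suffix = pattern[:match.start()], pattern[match.end():]
    return [f"{prefix}{n}{suffix}" for n in range(start, end + 1)]


def page_number(url: str) -> int:
    """Read the `page` query parameter so results can be sorted by page."""
    return int(parse_qs(urlparse(url).query)["page"][0])


start_urls = expand_range_url(
    "https://www.timesbusinessdirectory.com/company-listings?page=[1-20]"
)

# Even if pages are visited in an arbitrary order, sorting by page number
# restores the listing order.
visited_out_of_order = list(reversed(start_urls))
restored = sorted(visited_out_of_order, key=page_number)
```

If the scraper visits these 20 URLs in whatever order its queue produces, the output would look random even though every page is covered, so sorting the exported rows by page number might be enough to get the expected order back.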