I'm using LinkedIn.com...Recruiter Search. Results are paginated at 25 candidates per page, and on each page the candidates are only loaded when they are scrolled into view. I don't think sharing the URL would help, because you need to log on to see the actual data.

I don't know how to combine ElementScroll with ElementClick to make this work.

The ElementClick `clickElementSelector` is `.mini-pagination__quick-link [type='chevron-right-icon']`, and this works fine.

The candidates are inside an HTML ordered list (`ol`).

Candidates that have not been loaded yet match the selector `li.profile-list__occlusion-area`, and their `li` element is empty. Once scrolled into view, the `li` element contains, among many other things, a `div` with the `data-test-paginated-list-item` attribute.
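To make the lazy-loading state concrete: HTML captured before scrolling contains mostly empty placeholders. The Python sketch below (standard library only) counts loaded versus not-yet-loaded top-level `li` items in a snippet of page HTML, treating an item as loaded if it contains a `div` carrying the `data-test-paginated-list-item` attribute. The sample markup is hypothetical, modeled on the description above rather than copied from LinkedIn, and `count_candidates` is an illustrative helper name.

```python
from html.parser import HTMLParser

# Hypothetical markup modeled on the description above: one candidate already
# scrolled into view and one still an empty placeholder. Assumption: the
# occlusion class stays on the li after it has been filled in.
SAMPLE = """
<ol>
  <li class="profile-list__occlusion-area">
    <div data-test-paginated-list-item>
      <artdeco-entity-lockup-title><a href="#">Jane Doe</a></artdeco-entity-lockup-title>
    </div>
  </li>
  <li class="profile-list__occlusion-area"></li>
</ol>
"""


class CandidateCounter(HTMLParser):
    """Counts top-level <li> items and whether each contains the loaded-item marker."""

    def __init__(self):
        super().__init__()
        self.li_depth = 0          # tracks nesting so inner lists inside a card are ignored
        self.current_loaded = False
        self.loaded = 0
        self.unloaded = 0

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            if self.li_depth == 0:
                self.current_loaded = False  # starting a new candidate item
            self.li_depth += 1
        elif tag == "div" and self.li_depth > 0:
            # attrs is a list of (name, value) pairs; a bare attribute has value None
            if any(name == "data-test-paginated-list-item" for name, _ in attrs):
                self.current_loaded = True

    def handle_endtag(self, tag):
        if tag == "li" and self.li_depth > 0:
            self.li_depth -= 1
            if self.li_depth == 0:  # closed a top-level candidate item
                if self.current_loaded:
                    self.loaded += 1
                else:
                    self.unloaded += 1


def count_candidates(html: str) -> tuple[int, int]:
    """Return (loaded, unloaded) counts for the candidate items in html."""
    parser = CandidateCounter()
    parser.feed(html)
    parser.close()
    return parser.loaded, parser.unloaded


if __name__ == "__main__":
    loaded, unloaded = count_candidates(SAMPLE)
    print(f"loaded={loaded} unloaded={unloaded}")  # loaded=1 unloaded=1
```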

Inside each candidate `li`, for testing purposes, I just want to grab the name with the selector `artdeco-entity-lockup-title a`.

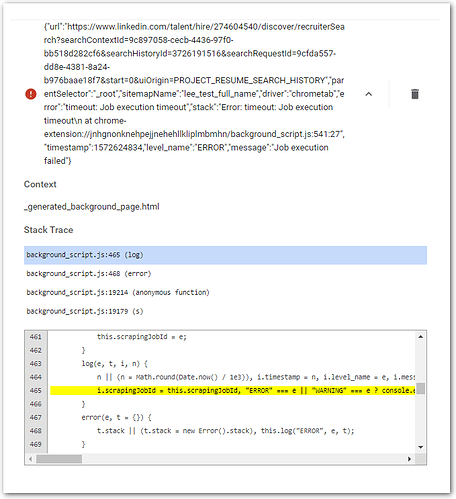

I have tried many permutations of ElementClick, ElementScroll, and parent selectors without complete success. Can someone help me understand how to set up ElementClick, ElementScroll, and SelectorText to page through 6 pages of candidates under these circumstances? I don't know what the parent hierarchy should be, nor how the other parameters should be set for each selector.
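One way to reason about the parenting hierarchy is to write the sitemap down as data. The Python sketch below assumes that Web Scraper's exported sitemap JSON has `selectors` entries with `id`, `type`, and `parentSelectors` (plus the per-type fields shown); those field names are from memory and should be checked against an exported sitemap. The `pagination` and `name` ids, the `ol > li` wrapper selector, the delay, and the start URL are hypothetical, and the hierarchy is untested. It reuses the click selector and name selector from this post and walks a selector's parent chain back to `_root`.

```python
import json

# Hypothetical Web Scraper sitemap. Field names are assumed to match what the
# extension exports; check them against a sitemap exported from your own setup.
# The start URL is a placeholder, and this hierarchy has not been tested.
SITEMAP = {
    "_id": "recruiter-search",  # hypothetical sitemap name
    "startUrl": ["<recruiter-search-url>"],  # placeholder, not a real URL
    "selectors": [
        {
            # Wrapper element per candidate; clicking the chevron loads the next page.
            "id": "pagination",
            "type": "SelectorElementClick",
            "parentSelectors": ["_root"],
            "selector": "ol > li",  # hypothetical wrapper selector
            "multiple": True,
            "clickElementSelector": ".mini-pagination__quick-link [type='chevron-right-icon']",
            "clickType": "clickMore",  # assumed value for "Click more"
            "delay": 2000,  # ms to wait after each click (assumed value)
        },
        {
            # Candidate name, extracted inside each wrapper element.
            "id": "name",
            "type": "SelectorText",
            "parentSelectors": ["pagination"],
            "selector": "artdeco-entity-lockup-title a",
            "multiple": False,
        },
    ],
}


def parent_chain(sitemap: dict, selector_id: str) -> list[str]:
    """Return the ids from _root down to selector_id by following parentSelectors."""
    by_id = {s["id"]: s for s in sitemap["selectors"]}
    chain = [selector_id]
    current = selector_id
    while current != "_root":
        # Follows only the first parent; a selector may list several.
        current = by_id[current]["parentSelectors"][0]
        if current in chain:
            raise ValueError(f"parentSelectors cycle at {current!r}")
        chain.append(current)
    return chain[::-1]


if __name__ == "__main__":
    print(" > ".join(parent_chain(SITEMAP, "name")))  # _root > pagination > name
    print(json.dumps(SITEMAP, indent=2))
```

ElementScroll is deliberately left out of this sketch, since where it belongs in the hierarchy is exactly what is being asked; the printed JSON is only a starting point for an import, assuming the field names match.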