Hello, dear experts,

I have used Web Scraper for months to further process table data from my HomeBanking website. This has not been possible since the beginning of this year. In the preview, Web Scraper shows me the correct selection of data, but the exported CSV or XLSX files always contain only the header (in this case line 11).

This problem has been described several times here but I couldn't find a solution.

Relevant information:

Firefox is up to date, Web Scraper has been uninstalled and reinstalled, and suspicious Firefox extensions (including ad blockers) have been deactivated.

I created Web Scraper sitemaps for 3 other websites (including the training website).

I repeated everything with Web Scraper on Edge (without other extensions): always the same result.

What is going on here?

I would be grateful for any help.

Hi,

Can I assume the relevant website is not publicly accessible?

Does the data appear correctly in the pop-up scraper window?

Hello,

The answer to both of your questions is yes.

I then looked for a publicly accessible website with table data. Even there I run into the problem described. Here is the sitemap:

{"_id":"Kalorientabelle","startUrl":["https://www.kalorientabelle.net/kalorien/obst"],"selectors":[{"columns":[{"extract":true,"header":"Lebensmittel","name":"Lebensmittel"},{"extract":true,"header":"Portionsgröße","name":"Portionsgröße"},{"extract":true,"header":"Kalorien","name":"Kalorien"},{"extract":true,"header":"Portionsgröße","name":"Portionsgröße"},{"extract":true,"header":"Kalorien","name":"Kalorien"}],"id":"ObstKalorien","multiple":true,"parentSelectors":["_root"],"selector":"table","tableDataRowSelector":"tr.MuiTableRow-hover","tableHeaderRowSelector":"tr.MuiTableRow-head","type":"SelectorTable"}]}
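For anyone who wants to inspect this sitemap outside the browser, here is a minimal Python sketch that parses the JSON from the post and prints the table selectors and column names. It uses only the standard library. The JSON content is the same as above, only spread over several lines. The duplicate-name check is an assumption about what might be worth looking at, not a confirmed cause of the export problem.

```python
import json
from collections import Counter

# Sitemap from the post above (same content, reformatted across lines).
SITEMAP_JSON = r'''
{"_id":"Kalorientabelle",
 "startUrl":["https://www.kalorientabelle.net/kalorien/obst"],
 "selectors":[{"columns":[
     {"extract":true,"header":"Lebensmittel","name":"Lebensmittel"},
     {"extract":true,"header":"Portionsgröße","name":"Portionsgröße"},
     {"extract":true,"header":"Kalorien","name":"Kalorien"},
     {"extract":true,"header":"Portionsgröße","name":"Portionsgröße"},
     {"extract":true,"header":"Kalorien","name":"Kalorien"}],
   "id":"ObstKalorien","multiple":true,"parentSelectors":["_root"],
   "selector":"table",
   "tableDataRowSelector":"tr.MuiTableRow-hover",
   "tableHeaderRowSelector":"tr.MuiTableRow-head",
   "type":"SelectorTable"}]}
'''

sitemap = json.loads(SITEMAP_JSON)
table = sitemap["selectors"][0]  # the only selector in this sitemap

print("table selector:     ", table["selector"])
print("header row selector:", table["tableHeaderRowSelector"])
print("data row selector:  ", table["tableDataRowSelector"])

# Column names that occur more than once in the table selector.
names = [col["name"] for col in table["columns"]]
duplicates = sorted(name for name, count in Counter(names).items() if count > 1)
print("duplicate column names:", duplicates)
```

In this sitemap, "Portionsgröße" and "Kalorien" each appear twice. Whether that matters for the export is not established in this thread.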

Could that be helpful?

Thanks for replying. The data preview window shows the table rows C, D, E.

The result of the XLSX export is as follows:

The CSV file contains:

I look forward to hearing from you.

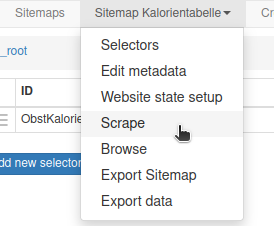

Just to be sure, you are executing the scrape by clicking 'Sitemap - Scrape' before downloading the data file?

Interesting question. In my previous tests I had already tried both variants: "Export data" alone, and the combination of "Scrape" + "Export data". There was no difference in the result.

Now I have applied this combination to the calorie table and actually get a complete result as an XLSX file.

However, the same procedure on my HomeBanking page produces only an almost empty spreadsheet. As a reminder: originally, it worked perfectly there.

One more hint: Other users here in the forum also wrote: "...nothing changed, and suddenly it worked again".

Mysterious.

Ok, I see. You should always click Scrape as this is the step where the data is collected. After that, a pop-up window should appear where the sitemap executes the scraping steps.

There is probably an error somewhere in the HomeBanking sitemap but, unfortunately, I cannot help you with that without being able to inspect the HTML of the website.

I think (and hope) that I have now solved the problem of the empty table. The crucial point was changing the default values of

Request interval (ms) = 2000

Page load delay (ms) = 2000.

I set both values to 10000, and the table is now complete.

@JanAp: Thank you for your patience and for thinking along with me.
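One possible reading of the fix above, as a minimal sketch: if a page fills in its table rows by script some time after the page opens, a scraper that reads the table too early sees only the header. The thread does not confirm that this is what happens on the HomeBanking page. The `SimulatedPage` class, the 6000 ms render time, and the sample row are all made up for illustration, and nothing here calls Web Scraper itself.

```python
from dataclasses import dataclass


@dataclass
class SimulatedPage:
    """Hypothetical page whose data rows are rendered some time after loading."""
    header: list[str]
    rows: list[list[str]]
    rows_ready_after_ms: int  # assumed time until the data rows exist in the page

    def snapshot(self, elapsed_ms: int) -> list[list[str]]:
        """Return the table as it looks elapsed_ms after the page was opened."""
        if elapsed_ms >= self.rows_ready_after_ms:
            return [self.header] + self.rows
        return [self.header]  # data rows have not been rendered yet


def scrape(page: SimulatedPage, page_load_delay_ms: int) -> list[list[str]]:
    """Wait page_load_delay_ms (simulated, no real sleep), then read the table."""
    return page.snapshot(page_load_delay_ms)


page = SimulatedPage(
    header=["Date", "Text", "Amount"],
    rows=[["2024-01-02", "Example booking", "-12.34"]],  # invented sample row
    rows_ready_after_ms=6000,  # invented value, chosen between 2000 and 10000
)

print(len(scrape(page, 2000)))   # 1: header only
print(len(scrape(page, 10000)))  # 2: header plus the data row
```

With the 2000 ms delay the simulated export contains only the header line, which matches the symptom described at the start of the thread. With 10000 ms the data row is included.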