Hi, I'd like to scrape the data of every restaurant in an Italian region. The problem is that a single region can have up to 90 pages of results, and I want to limit the scraping to the first 10 pages, but I don't know how to do that.

I tried using a negative `nth-child` range (`a.pageNum:nth-child(-n+3)`), but it didn't work.
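Part of the difficulty may be how `:nth-child` counts. This plain Python sketch (not Web Scraper code) computes which 1-based sibling positions `:nth-child(an+b)` matches; `-n+3` covers positions 1, 2 and 3, and `-n+10` covers 1 to 10. `:nth-child` counts every sibling element regardless of tag or class, so `a.pageNum:nth-child(-n+3)` only matches the `a.pageNum` links that happen to sit in those first positions. The pager markup in the example is hypothetical.

```python
def nth_child_positions(a: int, b: int, sibling_count: int) -> list[int]:
    """Return the 1-based sibling positions matched by :nth-child(an+b)."""
    matches = []
    for p in range(1, sibling_count + 1):
        if a == 0:
            # :nth-child(b) matches exactly one position.
            ok = p == b
        else:
            # p matches when p == a*n + b for some integer n >= 0.
            n, rem = divmod(p - b, a)
            ok = rem == 0 and n >= 0
        if ok:
            matches.append(p)
    return matches


# :nth-child(-n+10) selects the first ten positions among the siblings.
first_ten = nth_child_positions(-1, 10, 90)

# Hypothetical pager markup (an assumption, check the real page): the current
# page is a <span>, the other page numbers are <a class="pageNum"> links.
pager = ["span", "a", "a", "a", "a"]
# a.pageNum:nth-child(-n+3) means positions 1-3 that are also <a> elements.
picked = [p for p in nth_child_positions(-1, 3, len(pager)) if pager[p - 1] == "a"]
```

Here `picked` is `[2, 3]`: only two links match, because position 1 is taken by the `<span>`.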

Also, I can't retrieve the restaurant's coordinates from its detail page.

Could you help me?

URL: https://www.tripadvisor.it/Restaurants-g2440596-Province_of_Palermo_Sicily.html#EATERY_OVERVIEW_BOX

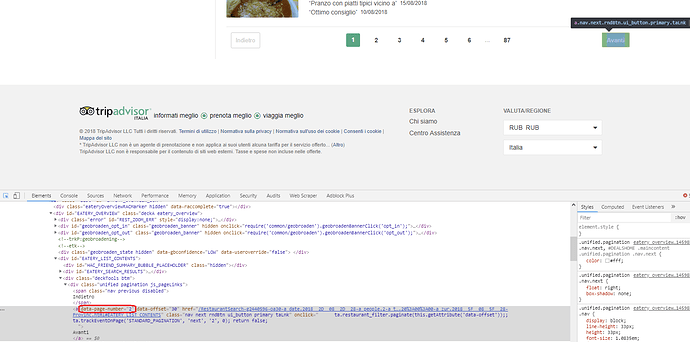

This is my sitemap:

{"_id":"prova_limite_pagine","startUrl":["https://www.tripadvisor.it/Restaurants-g2440596-Province_of_Palermo_Sicily.html#EATERY_OVERVIEW_BOX"],"selectors":[{"id":"pagina","type":"SelectorElement","parentSelectors":["_root","avanti"],"selector":"div.ui_columns.is-partitioned > div.ui_column.is-9","multiple":false,"delay":"1.5"},{"id":"apriLink","type":"SelectorLink","parentSelectors":["pagina"],"selector":"a.property_title","multiple":true,"delay":0},{"id":"nome","type":"SelectorText","parentSelectors":["apriLink"],"selector":"h1.heading_title","multiple":false,"regex":"","delay":0},{"id":"telefono","type":"SelectorText","parentSelectors":["apriLink"],"selector":"div.blEntry.phone span:nth-of-type(2)","multiple":false,"regex":"","delay":0},{"id":"avanti","type":"SelectorElementClick","parentSelectors":["pagina","avanti"],"selector":"div.pageNumbers","multiple":true,"delay":"1","clickElementSelector":"a.pageNum:nth-child(-n+3)","clickType":"clickOnce","discardInitialElements":false,"clickElementUniquenessType":"uniqueText"},{"id":"indirizzo","type":"SelectorText","parentSelectors":["apriLink"],"selector":"div.blEntry span.street-address","multiple":false,"regex":"","delay":0},{"id":"regione","type":"SelectorText","parentSelectors":["apriLink"],"selector":"div.blEntry span.locality","multiple":false,"regex":"","delay":0},{"id":"fascia_prezzo","type":"SelectorText","parentSelectors":["apriLink"],"selector":"span.header_tags","multiple":false,"regex":"","delay":0},{"id":"tipo_cucina1","type":"SelectorText","parentSelectors":["apriLink"],"selector":"span.header_links a:nth-of-type(1)","multiple":false,"regex":"","delay":0},{"id":"tipo_cucina2","type":"SelectorText","parentSelectors":["apriLink"],"selector":"span.header_links a:nth-of-type(2)","multiple":false,"regex":"","delay":0},{"id":"lat","type":"SelectorElementAttribute","parentSelectors":["apriLink"],"selector":"div.mapContainer","multiple":false,"extractAttribute":"data-lat","delay":"2"},{"id":"lon","type":"SelectorElementAttribute","parentSelectors":["apriLink"],"selector":"div.mapContainer","multiple":false,"extractAttribute":"data-lng","delay":"2"},{"id":"valutazione","type":"SelectorElementAttribute","parentSelectors":["apriLink"],"selector":"div.rs span.ui_bubble_rating","multiple":false,"extractAttribute":"content","delay":0},{"id":"recensioni","type":"SelectorHTML","parentSelectors":["apriLink"],"selector":"a.more span","multiple":false,"regex":"","delay":0}]}
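Another way to cap the run at 10 pages might be to drop the recursive `avanti` pagination selector and list the 10 listing URLs as start URLs instead (`startUrl` is already an array in the sitemap). This sketch assumes, without having verified it, that listing page k is reached by inserting `-oa{offset}-` after the geo id, with 30 restaurants per page; `page_urls` and `limit_sitemap` are hypothetical helpers, not part of Web Scraper.

```python
import json
import re

PAGE_SIZE = 30  # assumption: restaurants shown per listing page


def page_urls(first_page: str, pages: int, page_size: int = PAGE_SIZE) -> list[str]:
    """Build URLs for the first `pages` listing pages (assumed -oaN- scheme)."""
    url = first_page.split("#", 1)[0]  # drop the #EATERY_OVERVIEW_BOX fragment
    match = re.match(r"(.*/Restaurants-g\d+-)(.*)", url)
    if match is None:
        raise ValueError(f"unexpected listing URL: {url}")
    head, tail = match.groups()
    urls = [url]
    for k in range(1, pages):
        urls.append(f"{head}oa{k * page_size}-{tail}")
    return urls


def limit_sitemap(sitemap: dict, pages: int, pager_id: str = "avanti") -> dict:
    """Return a copy of the sitemap with fixed start URLs and no pager selector."""
    limited = json.loads(json.dumps(sitemap))  # deep copy, original untouched
    limited["startUrl"] = page_urls(sitemap["startUrl"][0], pages)
    # Remove the pagination selector and every reference to it as a parent.
    limited["selectors"] = [s for s in limited["selectors"] if s["id"] != pager_id]
    for selector in limited["selectors"]:
        selector["parentSelectors"] = [
            p for p in selector["parentSelectors"] if p != pager_id
        ]
    return limited
```

For example, `print(json.dumps(limit_sitemap(json.loads(sitemap_text), 10)))`, with `sitemap_text` holding the sitemap JSON, prints a sitemap whose `pagina` selector has only `_root` as parent and whose start URLs cover the first 10 pages, if the URL assumption holds.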